In this video, we walk you through creating a ControlNet-powered workflow featuring Flux Dev and a custom LoRA!

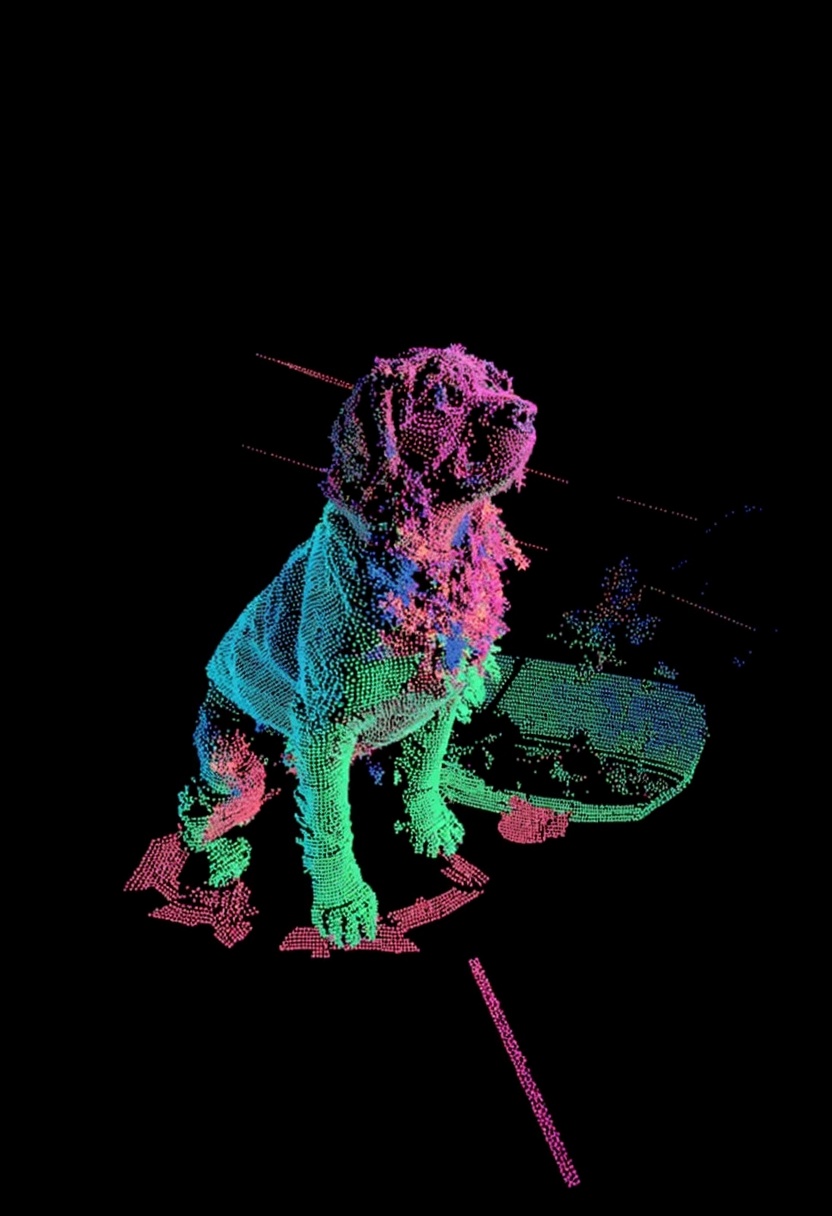

glif - Flux Dev Jasper Dev Depth Map [Lidar Version] by araminta_k

Step by step

- Click Build to start a new Glif project.

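- Add an image input block. The workflow JSON reads it through the `{image-input1}` placeholder.

- Add a text input block. The workflow JSON reads it through the `{input1}` placeholder.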

- Add a ComfyUI block.

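- Paste the ComfyUI JSON below into the ComfyUI block.

- (Optional) Adjust the LoRA loader node (`"78"`, Load HF Lora) in the ComfyUI block to use a different LoRA or strength. If you swap the LoRA, also update its trigger phrase (`L1d4r style`) in the prompt node `"6"`.

- (Optional) Adjust the ControlNet settings (`strength`, `start_percent` and `end_percent` in node `"47"`, Apply ControlNet) in the ComfyUI block.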

- Test and publish!
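If you would rather make the two optional adjustments in a script before pasting the JSON, here is a minimal Python sketch. It assumes the node IDs used in the JSON in this post (`"78"` for the LoRA loader, `"47"` for Apply ControlNet, `"6"` for the positive prompt); `tune_workflow` is a hypothetical helper, not part of Glif or ComfyUI. On Apply ControlNet, `start_percent` and `end_percent` bound the part of the sampling schedule where the depth map is applied, so the default 0 to 0.3 means it only steers roughly the early portion of generation.

```python
import copy
import json

# Node IDs as they appear in the ComfyUI JSON in this post (assumption:
# you have not renumbered nodes after pasting it).
LORA_NODE = "78"        # HFHubLoraLoader (Load HF Lora)
CONTROLNET_NODE = "47"  # ControlNetApplyAdvanced (Apply ControlNet)
PROMPT_NODE = "6"       # positive CLIPTextEncode


def tune_workflow(workflow, lora_repo=None, lora_file=None, lora_strength=None,
                  trigger=None, cn_strength=None, cn_start=None, cn_end=None):
    """Return a copy of `workflow` with optional LoRA / ControlNet overrides.

    Hypothetical helper for illustration: it only edits input fields that
    already exist in those nodes and leaves the original dict untouched.
    """
    wf = copy.deepcopy(workflow)

    lora = wf[LORA_NODE]["inputs"]
    if lora_repo is not None:
        lora["repo_id"] = lora_repo
    if lora_file is not None:
        lora["filename"] = lora_file
    if lora_strength is not None:
        # Simplification: apply the same weight to the model and CLIP paths.
        lora["strength_model"] = lora_strength
        lora["strength_clip"] = lora_strength
    if trigger is not None:
        # Keep the Glif text-input placeholder, swap in a new trigger phrase.
        wf[PROMPT_NODE]["inputs"]["text"] = "{input1}, " + trigger

    cn = wf[CONTROLNET_NODE]["inputs"]
    if cn_strength is not None:
        cn["strength"] = cn_strength
    if cn_start is not None:
        cn["start_percent"] = cn_start
    if cn_end is not None:
        cn["end_percent"] = cn_end
    # Sanity check (our own rule, not a ComfyUI error): keep the window ordered.
    if not 0 <= cn["start_percent"] <= cn["end_percent"] <= 1:
        raise ValueError("expected 0 <= start_percent <= end_percent <= 1")
    return wf


if __name__ == "__main__":
    # Tiny stand-in holding only the nodes this helper touches.
    demo = {
        "6": {"inputs": {"text": "{input1}, L1d4r style"}},
        "47": {"inputs": {"strength": 0.6, "start_percent": 0, "end_percent": 0.3}},
        "78": {"inputs": {"repo_id": "glif/LiDAR-Vision", "filename": "Lidar.safetensors",
                          "strength_model": 1, "strength_clip": 1}},
    }
    print(json.dumps(tune_workflow(demo, cn_strength=0.8, cn_end=0.5)["47"], indent=2))
```

Raising `strength` or `end_percent` makes the output follow the depth map more closely; lowering them gives the prompt and LoRA more freedom.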

Code and Content

ComfyUI JSON

```json
{
  "3": {
    "inputs": {
      "seed": 376385039400309,
      "steps": 28,
      "cfg": 1,
      "sampler_name": "dpmpp_2m",
      "scheduler": "beta",
      "denoise": 1,
      "model": ["78", 0],
      "positive": ["47", 0],
      "negative": ["47", 1],
      "latent_image": ["83", 0]
    },
    "class_type": "KSampler",
    "_meta": {
      "title": "KSampler"
    }
  },
  "6": {
    "inputs": {
      "text": "{input1}, L1d4r style",
      "clip": ["78", 1]
    },
    "class_type": "CLIPTextEncode",
    "_meta": {
      "title": "CLIP Text Encode (Prompt)"
    }
  },
  "7": {
    "inputs": {
      "text": "",
      "clip": ["78", 1]
    },
    "class_type": "CLIPTextEncode",
    "_meta": {
      "title": "CLIP Text Encode (Prompt)"
    }
  },
  "8": {
    "inputs": {
      "samples": ["3", 0],
      "vae": ["27", 0]
    },
    "class_type": "VAEDecode",
    "_meta": {
      "title": "VAE Decode"
    }
  },
  "9": {
    "inputs": {
      "filename_prefix": "ComfyUI",
      "images": ["8", 0]
    },
    "class_type": "SaveImage",
    "_meta": {
      "title": "Save Image"
    }
  },
  "11": {
    "inputs": {
      "guidance": 4,
      "conditioning": ["6", 0]
    },
    "class_type": "FluxGuidance",
    "_meta": {
      "title": "FluxGuidance"
    }
  },
  "27": {
    "inputs": {
      "vae_name": "ae.sft"
    },
    "class_type": "VAELoader",
    "_meta": {
      "title": "Load VAE"
    }
  },
  "30": {
    "inputs": {
      "image": "{image-input1}"
    },
    "class_type": "LoadImage",
    "_meta": {
      "title": "Load Image"
    }
  },
  "31": {
    "inputs": {
      "preprocessor": "Zoe-DepthMapPreprocessor",
      "resolution": 1024,
      "image": ["75", 0]
    },
    "class_type": "AIO_Preprocessor",
    "_meta": {
      "title": "AIO Aux Preprocessor"
    }
  },
  "47": {
    "inputs": {
      "strength": 0.6,
      "start_percent": 0,
      "end_percent": 0.3,
      "positive": ["11", 0],
      "negative": ["7", 0],
      "control_net": ["48", 0],
      "image": ["31", 0],
      "vae": ["27", 0]
    },
    "class_type": "ControlNetApplyAdvanced",
    "_meta": {
      "title": "Apply ControlNet"
    }
  },
  "48": {
    "inputs": {
      "control_net_name": "flux/jasperai_depth_flux_dev.safetensors"
    },
    "class_type": "ControlNetLoader",
    "_meta": {
      "title": "Load ControlNet Model"
    }
  },
  "72": {
    "inputs": {
      "unet_name": "flux1-dev-fp8.safetensors",
      "weight_dtype": "default"
    },
    "class_type": "UNETLoader",
    "_meta": {
      "title": "Load Diffusion Model"
    }
  },
  "73": {
    "inputs": {
      "clip_name1": "t5xxl_fp8_e4m3fn.safetensors",
      "clip_name2": "clip_l.safetensors",
      "type": "flux"
    },
    "class_type": "DualCLIPLoader",
    "_meta": {
      "title": "DualCLIPLoader"
    }
  },
  "75": {
    "inputs": {
      "width": 1024,
      "height": 1024,
      "upscale_method": "nearest-exact",
      "keep_proportion": false,
      "divisible_by": 2,
      "width_input": ["79", 0],
      "height_input": ["79", 1],
      "crop": "disabled",
      "image": ["30", 0]
    },
    "class_type": "ImageResizeKJ",
    "_meta": {
      "title": "Resize Image"
    }
  },
  "78": {
    "inputs": {
      "repo_id": "glif/LiDAR-Vision",
      "subfolder": "",
      "filename": "Lidar.safetensors",
      "strength_model": 1,
      "strength_clip": 1,
      "model": ["72", 0],
      "clip": ["73", 0]
    },
    "class_type": "HFHubLoraLoader",
    "_meta": {
      "title": "Load HF Lora"
    }
  },
  "79": {
    "inputs": {
      "image": ["30", 0]
    },
    "class_type": "SDXLAspectRatio",
    "_meta": {
      "title": "Image to SDXL compatible WH"
    }
  },
  "83": {
    "inputs": {
      "width": ["79", 0],
      "height": ["79", 1],
      "batch_size": 1
    },
    "class_type": "EmptyLatentImage",
    "_meta": {
      "title": "Empty Latent Image"
    }
  }
}
```
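In this JSON, every input is either a literal setting or a two-item list `[source_node_id, output_index]` that wires it to another node's output (for example, `"model": ["78", 0]` in the KSampler reads the first output of the LoRA loader). The Python sketch below relies on that convention to check that every link targets an existing node, derive one valid execution order, and list the curly-brace placeholders (`{input1}`, `{image-input1}`) that Glif is expected to fill from the input blocks. It needs Python 3.9+ for `graphlib`; the function names are hypothetical, and this only illustrates the structure of the JSON, not how Glif or ComfyUI validate workflows internally.

```python
import re
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Matches placeholders such as {input1} or {image-input1}
# (assumption: names contain only letters, digits, "_" or "-").
PLACEHOLDER = re.compile(r"\{([\w-]+)\}")


def is_link(value):
    """A link is a two-item list: [source node ID (str), output index (int)]."""
    return (isinstance(value, list) and len(value) == 2
            and isinstance(value[0], str) and isinstance(value[1], int))


def node_links(workflow):
    """Yield (node_id, input_name, source_id, output_index) for every link."""
    for node_id, node in workflow.items():
        for name, value in node["inputs"].items():
            if is_link(value):
                yield node_id, name, value[0], value[1]


def check_and_order(workflow):
    """Raise KeyError on a dangling link; otherwise return one execution order
    in which every node comes after all the nodes it reads from."""
    deps = {node_id: set() for node_id in workflow}
    for node_id, name, src, _ in node_links(workflow):
        if src not in workflow:
            raise KeyError(f"node {node_id} input {name!r} points at missing node {src}")
        deps[node_id].add(src)
    # TopologicalSorter takes {node: predecessors}; static_order() raises
    # graphlib.CycleError if the links form a loop.
    return list(TopologicalSorter(deps).static_order())


def placeholders(workflow):
    """Collect the names of all {placeholder} strings in literal inputs."""
    found = set()
    for node in workflow.values():
        for value in node["inputs"].values():
            if isinstance(value, str):
                found.update(PLACEHOLDER.findall(value))
    return found
```

After saving the JSON above to a file and loading it with `json.load`, `check_and_order` should place `"72"` and `"73"` before `"78"`, and `"3"` before `"8"`, because those are the links feeding each node; `placeholders` should report `input1` and `image-input1`.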